Building An Ethical AI Future Through XAI Financing – Analysis

By Observer Research Foundation

By Amoha Basrur and Sahil Deo

The rapid rise of artificial intelligence (AI) and machine learning (ML) across sectors has led to human decision-making being progressively replaced by data-fed algorithms. With 42 percent of companies worldwide reporting in 2022 that they were exploring AI adoption, algorithms have more power over our everyday lives than ever before.

The adoption of automated processing enables faster and more efficient decisions that can improve accuracy while lowering costs. But these advantages come with serious concerns about bias and consumer harm. Training data that is incomplete, unrepresentative, or reflective of historical biases will produce an algorithm that reproduces the same patterns. An experiment by AlgorithmWatch showed that Google Cloud Vision labeled an image of a dark-skinned individual holding a thermometer “gun”, while a similar image of a light-skinned individual was labeled “electronic device.” AlgorithmWatch theorised that because dark-skinned individuals were likely overrepresented in scenes depicting violence in the training data, the system learned to associate dark skin with violence. Errors like this have serious real-world consequences when such technologies are used for purposes such as weapon recognition in public spaces.

Recognition of the need to prevent algorithms from amplifying historical biases, or introducing new ones into decision-making, spurred a surge of action toward Responsible AI (RAI). Big Tech, most notably Google, Microsoft, and IBM, led the way in creating internal RAI principles. While the specifics vary from company to company, these principles broadly include fairness, transparency and explainability, privacy, and security. But the absence of standard principles has left companies significant flexibility and made the field reliant on self-regulation [1]. Smaller companies face greater challenges in navigating this space: studies have shown that nearly 90 percent of companies (85 percent in India) have encountered challenges in adopting ethical AI. External support is needed to promote and simplify ethics in AI across industries.

One of the most direct ways to prevent consumer harms and uphold these principles is to build accountability into systems through explainability-by-design approaches. Explainability refers to the ability to provide clear, understandable, and justifiable reasons for an algorithm’s decisions or actions. It covers both system functionality (the logic behind the general operation of the automated system) and specific decisions (the rationale behind a particular decision), allowing creators, users, and regulators to determine whether the decisions taken are fair.
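To make the distinction between system functionality and specific decisions concrete, here is a minimal Python sketch assuming a simple, transparent linear scoring model of the kind that might be used in lending. The class `LinearScorer`, the feature names (`income_lakh`, `existing_debt_lakh`, `years_employed`), the weights, and the threshold are all hypothetical illustrations, not drawn from the article or any real system. The global explanation ranks inputs by the size of their weights (how the system works in general); the local explanation breaks one applicant's score into per-feature contributions (why this particular decision was reached).

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """Hypothetical transparent model: score = bias + sum(weight * feature value)."""

    weights: dict[str, float]
    bias: float
    threshold: float  # approve when score >= threshold

    def score(self, applicant: dict[str, float]) -> float:
        return self.bias + sum(w * applicant[name] for name, w in self.weights.items())

    def decide(self, applicant: dict[str, float]) -> bool:
        return self.score(applicant) >= self.threshold

    def global_explanation(self) -> list[tuple[str, float]]:
        # System functionality: which inputs the model relies on most in general,
        # ranked by the absolute size of their weights.
        return sorted(self.weights.items(), key=lambda kv: abs(kv[1]), reverse=True)

    def local_explanation(self, applicant: dict[str, float]) -> list[tuple[str, float]]:
        # Specific decision: how much each input pushed this applicant's score up or
        # down (weight * value), ranked by absolute contribution.
        contributions = {name: w * applicant[name] for name, w in self.weights.items()}
        return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Illustrative values only; not taken from any real lending model.
model = LinearScorer(
    weights={"income_lakh": 0.5, "existing_debt_lakh": -1.0, "years_employed": 0.25},
    bias=-1.0,
    threshold=0.0,
)
applicant = {"income_lakh": 6.0, "existing_debt_lakh": 2.0, "years_employed": 4.0}

print("approved:", model.decide(applicant))
print("how the system works:", model.global_explanation())
print("why this decision:", model.local_explanation(applicant))
```

Because the model is linear, the per-feature contributions plus the bias add up exactly to the score, so the explanation is faithful by construction. For the complex "black box" models discussed in this article, comparable per-decision explanations typically have to be approximated with post-hoc interpretability methods.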

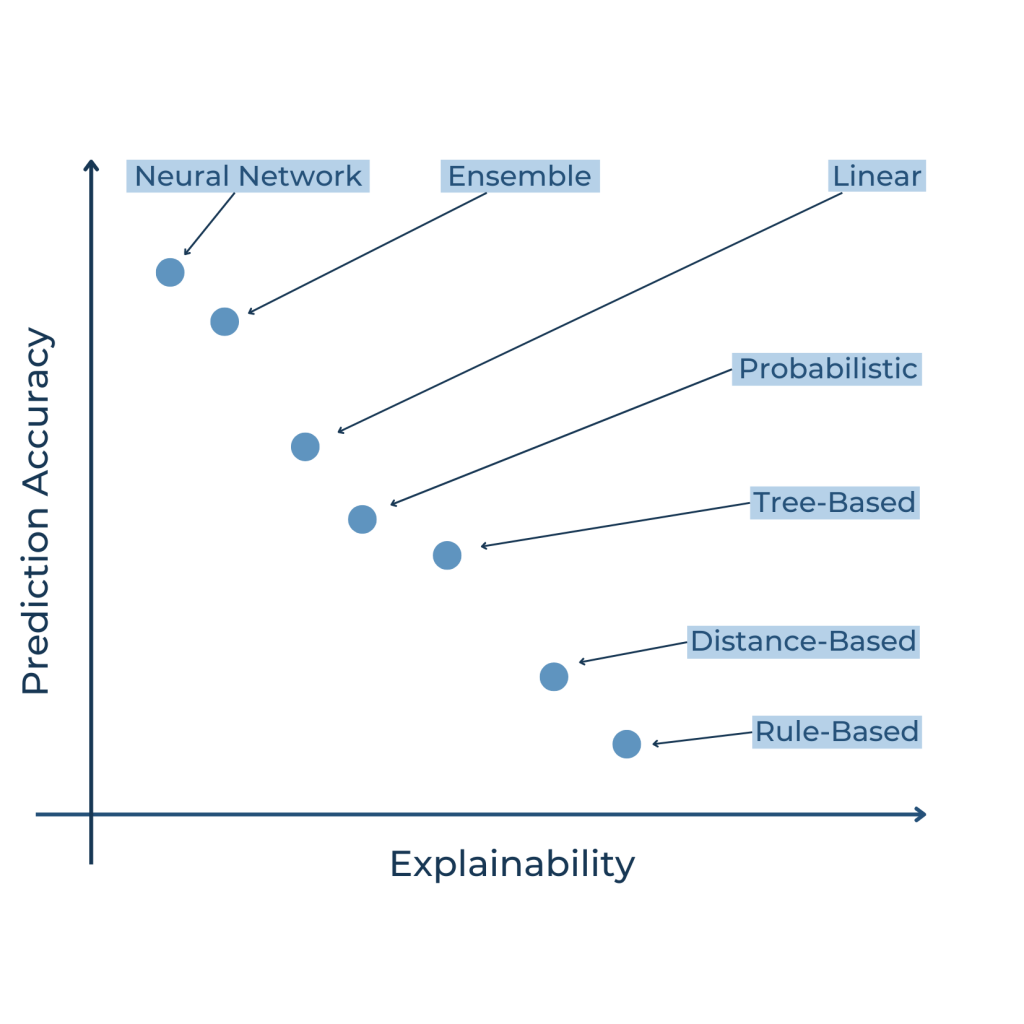

Although explainability is at the heart of ethical AI, it is a particularly tricky standard because it comes with strings attached and hidden costs. The main concern is that requiring explainability can hinder an algorithm’s performance. Advanced machine learning models can achieve high accuracy by learning complex, non-linear relationships between input data and output predictions. However, these “black box” models can be difficult to interpret, making it hard to ascertain the validity of their decisions [2]. Shifting to a more explainable model would therefore involve trading away some of the model’s accuracy. This inverse relationship is a significant challenge to efforts at mainstreaming explainability.

Along with the loss of efficiency, compliance may have further implications for companies. These include the cost of providing requested clarifications to individuals, and the potential conflict between providing explanations and protecting trade secrets and intellectual property [3]. This may disproportionately affect Micro, Small and Medium Enterprises (MSMEs) and start-ups compared with large corporations, which have greater resources to adapt to new regulations. Mandating explainability would add a compliance burden to a nascent industry.

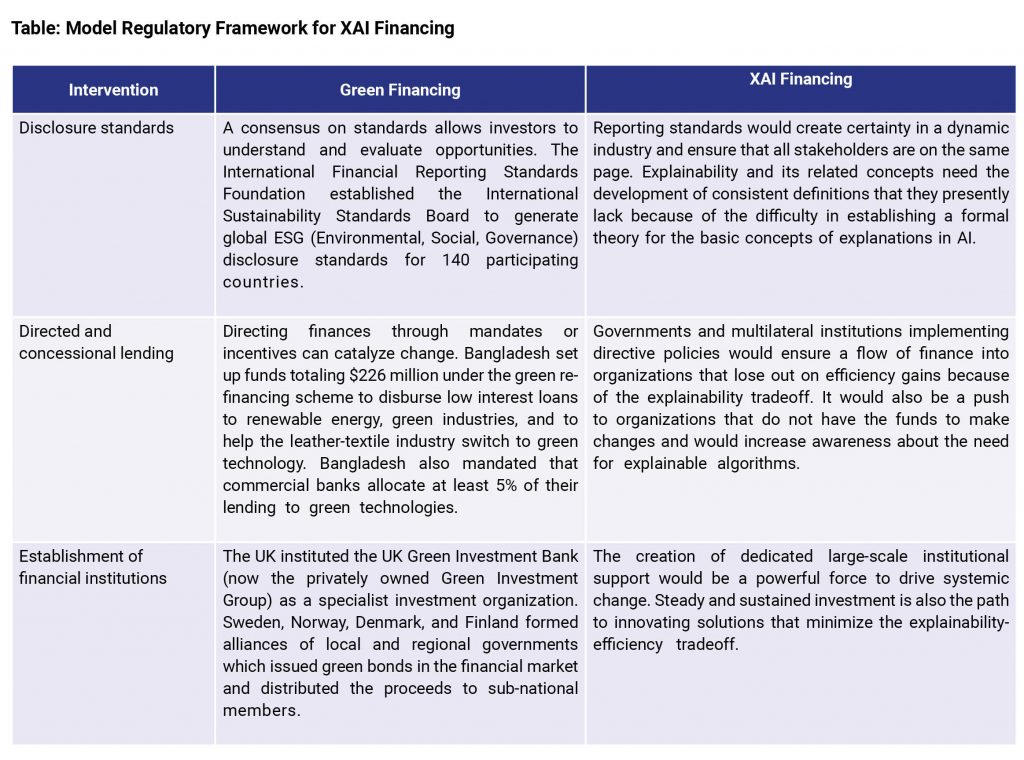

The tradeoff between performance and ethics is not a new one. Investors have long recognised that environmental protection may come at the cost of economic output. Rising environmental awareness brought an increase in mindful investment. Until the 1990s, investors were mostly focused on avoiding the most egregious polluters, but they soon began to actively seek out companies that consciously managed their impact. Green finance was developed to increase financial flows to organisations with sustainable development priorities. It is central to the discussion on sustainable economic growth because it promotes climate-friendly practices while still seeking financial returns. Green finance is a way for the State and the public to signal their priorities to the market, and to compensate firms for the profits they forgo by avoiding extractive operations.

Explainability involves a similar negotiation between a firm’s economic interests and its wider impact on society. To ensure the safety of AI systems in the long term, firms may have to compromise on profits and pay compliance costs in the short term. Like Green Bonds or ESG Funds, dedicated financial tools could promote explainable AI (XAI) by helping companies overcome the costs of building explainability, advancing the goal of ethical and responsible AI. The launch and success of XAI funding would require an appropriate regulatory framework, which could be modelled on the framework for green finance [4].

Given the formidable growth in the power and scope of AI, ethics through explainability needs to be at the forefront of conversations about innovation. Although standards for algorithm assessment are beginning to converge, existing legal doctrines are poorly equipped to tackle algorithmic decision-making [5]. In the absence of adequate regulation, the demand for transparency must come from the public [6]. Changing the status quo will require organised and purposeful funding to shift the industry’s priorities. Given the dynamism of the digital economy, a culture of explainability needs to be established in the industry’s early days. If not, the industry may drift irreversibly toward high-performance algorithms that cannot meet their ethical obligations [7].

References

[1] Deo, S. (2021). The Under-appreciated Regulatory Challenges posed by Algorithms in FinTech. Hertie School.

[2] Linardatos, P., Papastefanopoulos, V., & Kotsiantis, S. (2021). Explainable AI: A Review of Machine Learning Interpretability Methods. Entropy, 23(1), 18. https://dx.doi.org/10.3390/e23010018

[3] Wachter, S., Mittelstadt, B., & Floridi, L. (2017). Why a Right to Explanation of Automated Decision-Making Does Not Exist in the General Data Protection Regulation. International Data Privacy Law, 7(2).

[4] Ghosh, Nath, & Ranjan. (2021, January). Green Finance in India: Progress and Challenges. RBI Bulletin.

[5] Gillis, T., & Spiess, J. (2019). Big Data and Discrimination. The University of Chicago Law Review, 86(2).

[6] Pasquale, F. (2016). The Black Box Society: The Secret Algorithms That Control Money and Information. Harvard University Press.

[7] Deo, S. (2021). The Under-appreciated Regulatory Challenges posed by Algorithms in FinTech. Hertie School.