Web Surfing That Feels Instantaneous, Even Though It’s Not

If the coronavirus pandemic drove your life online, you’ve probably been there:

Maybe you’re using video chat to get work done or connect with far-flung friends. No matter how much bandwidth you have, the lag between one person speaking and the rest hearing the words means you keep talking over each other.

It’s the annoying delay when you hit play on your streaming service, and your movie or TV show takes forever to load. Or when you’re about to reach a new level in a video game, but your controller doesn’t register your moves in time.

That fraction of a second, less than the blink of an eye, is called network latency. It’s the time, measured in milliseconds, that a signal takes to make a round trip from your computer to a server and back. The greater the distance, the longer the trip takes.

Milliseconds may not seem like a big deal. But on the internet, even a few of them can make browsing feel like wading through mud. Slow responses can even cause users to lose interest or take their business elsewhere.

Google found that if search results are slowed down by four tenths of a second, its users do eight million fewer searches a day. And according to Amazon, even smaller delays (an extra tenth of a second) would cost the company 1% in sales, a staggering sum in the hundreds of millions of dollars a year.

Duke computer scientist Bruce Maggs thinks we can do better. A few years ago, he and colleagues decided to take on a challenge: building a speed-of-light internet.

If they could get data to and fro more quickly, they wondered, could they make web surfing feel smoother and more seamless, even instantaneous?

The team presented their approach on April 6 at the 19th USENIX Symposium on Networked Systems Design and Implementation (NSDI ’22) in Renton, Washington.

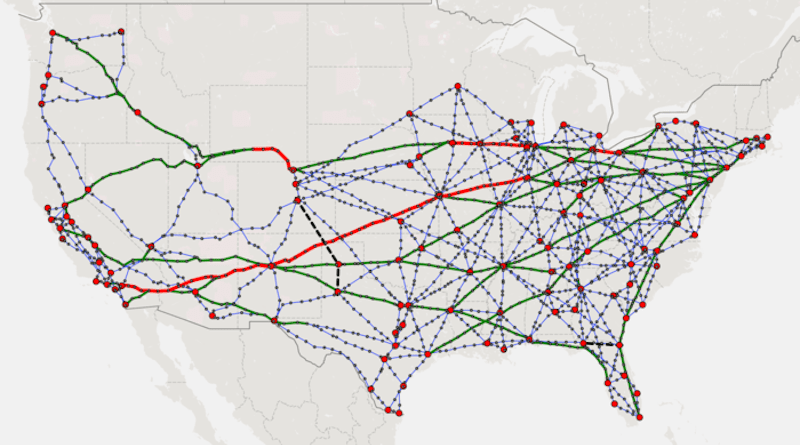

In a project co-led with Brighten Godfrey at the University of Illinois, Gregory Laughlin at Yale and Ankit Singla at ETH Zurich, they envision a network stretching across the U.S. that responds 10 to 100 times faster than the normal internet.

If deployed in the 120 largest U.S. cities, it could give 85% of Americans the option of connecting over vast distances in near real-time, as if they were in the same room.

The problem is that today’s internet isn’t optimized for speed, Maggs said.

In theory, data could travel at the speed of light: a top clip of 300,000 kilometers per second. That’s a mind-boggling 670 million miles an hour.

At that pace, internet traffic should be able to cover the 2,800 miles between Los Angeles and New York City in 15 milliseconds.
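The 15-millisecond figure is simple arithmetic: distance divided by speed. The sketch below reproduces it, assuming the article's rounded 300,000 km/s and a perfectly straight path; the function and constant names are illustrative, not from the research.

```python
# Speed of light in a vacuum, rounded as in the article (km/s).
SPEED_OF_LIGHT_KM_S = 300_000
KM_PER_MILE = 1.609344


def one_way_latency_ms(distance_miles: float, speed_km_s: float = SPEED_OF_LIGHT_KM_S) -> float:
    """Time for a signal to cover distance_miles in a straight line, in milliseconds."""
    distance_km = distance_miles * KM_PER_MILE
    return distance_km / speed_km_s * 1000


# Sanity check on the article's conversion: km/s -> miles per hour.
mph = SPEED_OF_LIGHT_KM_S * 3600 / KM_PER_MILE
print(f"Light speed: about {mph / 1e6:.0f} million mph")

# Los Angeles to New York City, using the article's 2,800-mile figure.
la_to_nyc = one_way_latency_ms(2_800)
print(f"LA -> NYC: {la_to_nyc:.1f} ms one way, {2 * la_to_nyc:.1f} ms round trip")
```

Because latency is usually quoted as a round trip, the best-case figure between the two coasts is about twice the one-way time.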

But the reality is much slower. Maggs and his team showed that moving even small amounts of data over the internet (say, just downloading a webpage) often takes 37 to 100 times longer than you’d expect.

“It should be faster, right?” Maggs said.

A big culprit behind the delay, they say, is the way internet traffic is routed.

Every time you check email, search for information on Google, or scroll through social media, data is being sent and received through hundreds of thousands of miles of buried fiber optic cables, thin strands of glass that are bundled together and transmit data as pulses of light.

But this system can be inefficient. Buried cables have to zigzag around mountains and squiggle their way across the landscape as they follow roads and railways, the twists and turns costing precious time.

Internet traffic traveling from Sweden to Croatia — a driving distance of about 1,300 miles — might take an 8,000-mile oceanic detour through a router in New York City first.

“Fiber paths almost never follow a straight line between two locations,” Maggs said.

Add to that the fact that internet providers often route data along the path that is the cheapest, not the fastest, even backtracking to save money.
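Choosing the cheapest route instead of the fastest is a matter of which number a shortest-path search minimizes. The sketch below runs Dijkstra's algorithm twice over the same network, once weighting links by latency and once by cost. The topology, the "Germany" hop and all the numbers are hypothetical, chosen only to show how a cost-optimal route can be a long detour; real inter-provider routing (BGP policy) is far more involved.

```python
import heapq

# Hypothetical links: (endpoint, endpoint, one-way latency in ms, transit cost in arbitrary units).
LINKS = [
    ("Sweden", "Germany", 10, 5),
    ("Germany", "Croatia", 10, 5),
    ("Sweden", "New York", 40, 1),
    ("New York", "Croatia", 45, 1),
]


def build_graph(links):
    """Undirected adjacency list: node -> [(neighbor, latency_ms, cost), ...]."""
    graph = {}
    for a, b, latency_ms, cost in links:
        graph.setdefault(a, []).append((b, latency_ms, cost))
        graph.setdefault(b, []).append((a, latency_ms, cost))
    return graph


def best_path(graph, src, dst, weight):
    """Dijkstra's algorithm; weight(latency_ms, cost) gives the value to minimize per link."""
    queue = [(0, src, [src])]
    visited = set()
    while queue:
        total, node, path = heapq.heappop(queue)
        if node == dst:
            return total, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, latency_ms, cost in graph.get(node, []):
            if nxt not in visited:
                heapq.heappush(queue, (total + weight(latency_ms, cost), nxt, path + [nxt]))
    return None


def path_latency(graph, path):
    """Total latency (ms) along an explicit path."""
    lat = {(a, b): l for a, nbrs in graph.items() for b, l, _ in nbrs}
    return sum(lat[(a, b)] for a, b in zip(path, path[1:]))


graph = build_graph(LINKS)
fast_ms, fast_path = best_path(graph, "Sweden", "Croatia", lambda lat, cost: lat)
cheap_cost, cheap_path = best_path(graph, "Sweden", "Croatia", lambda lat, cost: cost)
print("Fastest: ", " -> ".join(fast_path), f"({fast_ms} ms)")
print("Cheapest:", " -> ".join(cheap_path), f"({path_latency(graph, cheap_path)} ms)")
```

In this toy network the cheapest route is more than four times slower than the fastest one, even though both connect the same two endpoints.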

“That means the path will be more roundabout,” Maggs said.

There’s another thing that gets in the way. When people talk about the speed of light, they usually mean the speed of light in a vacuum. When light passes through a medium other than a vacuum — such as air, water, or glass — it slows down.

The speed of light in a vacuum is 300,000 kilometers per second, but light slows to about two-thirds of that speed in silica glass, the material standard optical fiber is made of.

“These things all compound and multiply each other,” Maggs said. That is why, when it comes to response time on the information superhighway, the cosmic speed limit remains far out of reach. But the researchers found that one tiny corner of the internet gets close:
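To see how the factors multiply, the sketch below combines two of them using the article's own figures: the 8,000-mile detour for a 1,300-mile Sweden-to-Croatia trip, and fiber's roughly two-thirds-of-light speed. It treats the 1,300-mile driving distance as the ideal path and ignores routers and protocol overhead, so it is a simplified illustration rather than a measurement.

```python
SPEED_OF_LIGHT_KM_S = 300_000
FIBER_SPEED_KM_S = SPEED_OF_LIGHT_KM_S * 2 / 3  # light travels at roughly 2/3 c in silica glass
KM_PER_MILE = 1.609344


def latency_ms(miles, speed_km_s):
    """One-way propagation delay in milliseconds."""
    return miles * KM_PER_MILE / speed_km_s * 1000


ideal = latency_ms(1_300, SPEED_OF_LIGHT_KM_S)  # direct path at light speed in a vacuum
actual = latency_ms(8_000, FIBER_SPEED_KM_S)    # oceanic detour, through glass

path_stretch = 8_000 / 1_300                              # route is ~6.2x longer
fiber_penalty = SPEED_OF_LIGHT_KM_S / FIBER_SPEED_KM_S    # medium is 1.5x slower
print(f"ideal {ideal:.1f} ms, actual {actual:.1f} ms")
print(f"slowdown {actual / ideal:.1f}x = {path_stretch:.2f} (detour) x {fiber_penalty:.1f} (fiber)")
```

The two penalties multiply rather than add: a 6.15x longer route in a 1.5x slower medium yields roughly a 9x slowdown before any other source of delay is counted.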

In the early 2010s, a custom-built network was set up to shave a few thousandths of a second off the time it takes for financial traders to send data back and forth between the Chicago Mercantile Exchange and stock exchanges in New Jersey, a roughly 700-mile route traversing six states.

That amount of time is imperceptible to humans. But it’s enough to give trading firms an edge over their rivals in the stock market, where receiving data even a millisecond sooner can make the difference between making a profit or losing out.

After analyzing this specialized network, the team wondered whether similar approaches could reduce internet delays nationwide.

Part of the trading network’s advantage lies in how data is transported, Maggs said.

Instead of relying on buried cables, it uses microwave radio transmissions to carry data through the air, where signals travel about 50% faster than light does in fiber.

The network also saves time with a shortcut. Unlike fiber cables, which have to wind their way around obstacles as they follow the lay of the land, microwave signals don’t bend: they’re transmitted in a straight line. That makes for shorter routes, allowing them to zip back and forth between New Jersey and the Windy City in eight thousandths of a second.
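A quick calculation shows why the microwave round trip lands near eight thousandths of a second. The sketch assumes the article's rough 700-mile route, treats signals in air as moving at essentially the vacuum speed of light, and, generously, gives fiber the same straight-line path; real fiber routes are longer, so the true gap is wider.

```python
SPEED_OF_LIGHT_KM_S = 300_000                   # microwaves in air travel at nearly this speed
FIBER_SPEED_KM_S = SPEED_OF_LIGHT_KM_S * 2 / 3  # roughly two-thirds of c in glass
KM_PER_MILE = 1.609344


def round_trip_ms(miles, speed_km_s):
    """Round-trip propagation delay in milliseconds over a path of the given length."""
    return 2 * miles * KM_PER_MILE / speed_km_s * 1000


route_miles = 700  # article's approximate Chicago-New Jersey distance
microwave = round_trip_ms(route_miles, SPEED_OF_LIGHT_KM_S)
fiber = round_trip_ms(route_miles, FIBER_SPEED_KM_S)  # hypothetical perfectly straight fiber
print(f"microwave {microwave:.1f} ms vs straight-line fiber {fiber:.1f} ms round trip")
```

Even with an idealized straight cable, fiber's round trip comes out 1.5 times longer, which is the "50% faster" advantage of sending signals through the air.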

In tests, the researchers found that the financial traders’ microwave-based network was reliably faster than fiber even in bad weather, when rain can weaken the signal between towers.

The technology is decades old. The team’s research showed that something similar — towers equipped with antennas that send microwave signals to and fro — could be built among the biggest cities in the U.S. or Europe, and could shrink lag to within 5% of what’s possible at light speed.

Assuming a hypothetical budget of 3,000 towers spaced roughly 40 to 60 miles apart, the team worked out how best to beam signals from tower to tower along the shortest possible paths, without hills, buildings or trees getting in the way.
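One way to picture this kind of planning is as a shortest-path search over towers, where a hop is allowed only if the next tower is within range and nothing blocks the line of sight. The sketch below is a simplified stand-in, not the team's optimization: tower names and flat-plane coordinates are hypothetical, the 97 km range approximates the article's 60-mile upper spacing, and obstructions are modeled as an explicit `blocked` set of tower pairs rather than computed from terrain and Earth curvature.

```python
import heapq
import math

MAX_HOP_KM = 97  # about 60 miles, the upper end of the article's tower spacing

# Hypothetical tower positions in km on a flat plane.
TOWERS = {
    "NYC": (0, 0),
    "T1": (80, 10),
    "T2": (150, 0),
    "T3": (90, -60),
    "CHI": (230, 5),
}


def shortest_tower_path(towers, src, dst, max_hop_km=MAX_HOP_KM, blocked=frozenset()):
    """Dijkstra over towers. A hop is allowed only if it is within range and the
    pair is not in `blocked` (a set of frozensets marking obstructed links)."""
    def hop_ok(a, b):
        return frozenset((a, b)) not in blocked and math.dist(towers[a], towers[b]) <= max_hop_km

    queue = [(0.0, src, [src])]
    seen = set()
    while queue:
        dist_km, node, path = heapq.heappop(queue)
        if node == dst:
            return dist_km, path
        if node in seen:
            continue
        seen.add(node)
        for nxt in towers:
            if nxt != node and nxt not in seen and hop_ok(node, nxt):
                step = math.dist(towers[node], towers[nxt])
                heapq.heappush(queue, (dist_km + step, nxt, path + [nxt]))
    return None  # no chain of in-range, unobstructed hops exists


km, path = shortest_tower_path(TOWERS, "NYC", "CHI")
print(" -> ".join(path), f"{km:.0f} km")

# Suppose a hill blocks the T1-T2 link: the route detours through T3.
km, path = shortest_tower_path(TOWERS, "NYC", "CHI", blocked={frozenset(("T1", "T2"))})
print(" -> ".join(path), f"{km:.0f} km")
```

A single obstructed link adds about 85 km in this toy layout, which hints at why tower placement matters so much for keeping routes close to a straight line.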

Simply sending data along straighter, more direct paths, they found, would make it possible to click on a link to a website and get the request to a server three times faster.

And at an estimated cost of 81 cents per gigabyte, the potential value of such a network would greatly exceed its price tag. The team’s cost-benefit analyses suggest that, for a company like Google, delivering search results a mere 200 milliseconds faster would mean an additional $87 million a year in profits.

But what’s most exciting about this, the researchers say, is the potential for people separated by hundreds of miles to feel like they’re interacting and sharing information online in real-time.

Studies suggest that delays shorter than 30 milliseconds pass too quickly for most people to notice. If scientists could shrink lag times below that threshold, delays would become virtually imperceptible.
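How far apart can two people be and still stay under that threshold? The sketch below inverts the latency formula for a 30-millisecond round-trip budget, assuming pure propagation delay with no routing detours, processing or queuing, so it gives an upper bound rather than a prediction.

```python
SPEED_OF_LIGHT_KM_S = 300_000
FIBER_SPEED_KM_S = SPEED_OF_LIGHT_KM_S * 2 / 3


def max_one_way_km(rtt_budget_ms, speed_km_s):
    """Farthest endpoint whose round trip fits in the budget, ignoring every
    source of delay except signal propagation along a straight path."""
    return rtt_budget_ms * speed_km_s / 1000 / 2


budget_ms = 30  # rough perceptibility threshold cited in the article
print(f"light speed:    {max_one_way_km(budget_ms, SPEED_OF_LIGHT_KM_S):.0f} km")
print(f"straight fiber: {max_one_way_km(budget_ms, FIBER_SPEED_KM_S):.0f} km")
```

At light speed the reach is about 4,500 km, roughly the 2,800-mile Los Angeles to New York distance; over even an idealized straight fiber it shrinks to about 3,000 km.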

“We’re not saying we can make the whole internet operate at the speed of light,” Maggs said. That’s because, while microwave transmission may be faster than fiber, in terms of carrying capacity it can’t compete.

But for applications where timing is everything, such a network could reduce lag at lower costs than existing services.

For online gamers, who are constantly sending and receiving data to play together in real-time, routing traffic through their network could shrink lag times to a third of what’s possible with today’s internet.

Maggs said his wife, a musician, could play in sync with colleagues on the West Coast over the internet and hear them as if they were in the same concert hall, without noticing a delay.

“It would be kind of like deciding when to use the U.S. Post Office and when to use Federal Express,” Maggs said. “There’s a huge difference in cost and performance. You’d have to pick just the things where the latency actually mattered.”

If you’re just streaming movies, you might not care. But for Maggs, who used to play multiplayer online games as a kid, “when I’m sending my command to the gaming server, where I’ve just pressed F to fire my phasers, I want that to go as fast as I possibly can.”