Human-Like Features In Robot Behavior: Response Time Variability Can Be Perceived As Human-Like

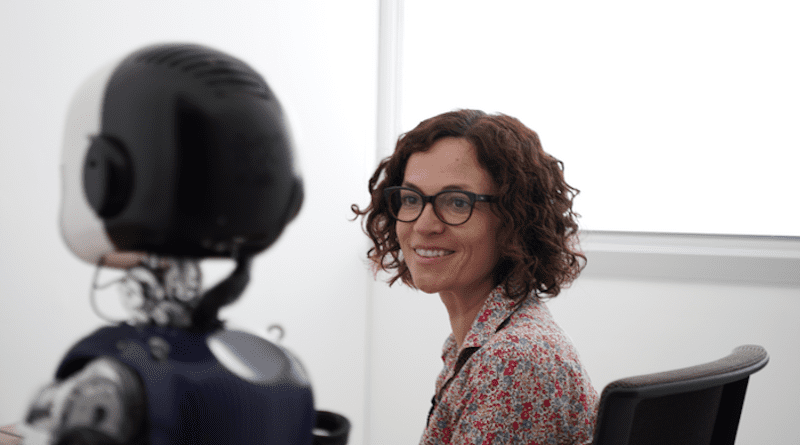

Humans behave and act in ways that other humans recognize as human-like. If humanness has specific features, can those features be replicated on a machine such as a robot? Researchers at IIT-Istituto Italiano di Tecnologia (Italian Institute of Technology) tried to answer that question by implementing a non-verbal Turing test in a human-robot interaction task. They involved human participants and the humanoid robot iCub in a joint action experiment. They found that specific features of human behavior, namely response timing, can be transferred to the robot so that humans cannot tell whether they are interacting with a person or a machine.

The study has been published today in the journal Science Robotics and is the first step toward understanding what kind of behaviour robots could exhibit in the future across their possible fields of application, such as healthcare or manufacturing production lines.

The research group is coordinated by Agnieszka Wykowska, head of IIT’s “Social Cognition in Human-Robot Interaction” lab in Genova, and grantee of the European Research Council (ERC) for the project titled “InStance”, which addresses the question of when and under what conditions people treat robots as intentional agents.

“The most exciting result of our study is that the human brain has sensitivity to extremely subtle behaviour which manifests humanness” – comments Agnieszka Wykowska. “In our non-verbal Turing test, human participants had to judge whether they were interacting with a machine or a person, by considering only the timing of button presses during a joint action task”.

The research group focused on two basic features of human behaviour: response time and accuracy when responding to external stimuli, characteristics that they had previously mapped to obtain an average human profile. The researchers used this profile to build their experiment, in which participants were asked to respond to visual stimuli on a screen. Participants played the game in two human-robot pairs: each person teamed up with a robot whose responses were either controlled by the person from the other pair or pre-programmed.

“In our experiment, we pre-programmed the robot by slightly varying the average human response profile”, explains Francesca Ciardo, first author of the study and Marie Sklodowska-Curie fellow at Wykowska’s group in Genova. “In this way, the possible robot responses were of two types: on one hand, it was fully human-like since it was controlled by a person remotely, on the other hand, it was lacking some human-like features because it was pre-programmed”.
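As a rough illustration of what pre-programming responses around an average human profile could look like, here is a minimal Python sketch. It is not the researchers' implementation: the function name, the Gaussian timing model, the lower bound on response time, and every numeric value are assumptions made only for this example, since the article does not report the actual parameters.

```python
import random
import statistics


def preprogrammed_response_times(mean_rt_ms, sd_rt_ms, n_trials,
                                 shift_ms=0.0, floor_ms=100.0, seed=None):
    """Draw simulated response times (in ms) around an average profile.

    Hypothetical model: each trial's response time is sampled from a
    Gaussian centred on the average human response time plus a small
    shift, then clipped so that no response is implausibly fast.
    """
    rng = random.Random(seed)  # local generator so runs are reproducible
    centre = mean_rt_ms + shift_ms  # "slightly varied" average profile
    times = []
    for _ in range(n_trials):
        rt = rng.gauss(centre, sd_rt_ms)
        times.append(max(rt, floor_ms))  # enforce the assumed lower bound
    return times


if __name__ == "__main__":
    # Illustrative values only; they are not taken from the study.
    simulated = preprogrammed_response_times(450.0, 80.0, n_trials=20,
                                             shift_ms=15.0, seed=42)
    print(f"mean: {statistics.mean(simulated):.1f} ms")
    print(f"sd:   {statistics.stdev(simulated):.1f} ms")
```

The point of the sketch is the trial-to-trial variability: a robot that always responded after exactly the same delay would lack the response time variability that the study's title links to perceived humanness.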

The results showed that, when the robot was in fact pre-programmed, people interacting with it could not tell whether it was human-controlled or pre-programmed. This suggests that the robot passed this version of the non-verbal Turing test in this specific task.

“The next steps of scientific investigation would be to design a more complex behaviour on the robot, to have a more elaborate interaction with the humans and see what specific parameters of that interaction are perceived as human-like or mechanical”, concludes Wykowska.