Global Conspiracy Theory Attacks – Analysis

Security challenge: As local news media deteriorate, conspiracy theories, crafted to incite fear and tarnish achievements, flourish online.

By Tom Ascott*

The internet has given conspiracy theories a global platform. While traditional local news media deteriorate, the borders of online communities are broadening, offering weird beliefs that carry political, security and economic implications.

Conspiracy theories are common, and all countries struggle with them. In Poland, the 2010 plane crash in Smolensk that killed the then-president became fodder for conspiracy theories. And on the 50th anniversary of the Apollo 11 moon landing, NASA contended with accusations that the moon landing was filmed on a soundstage, the earth is flat and the moon is a hologram. Spain’s fact-checking site Newtral set out to fight the mistruths.

As some people find social interactions more challenging, online platforms provide outlets for expression. A vicious cycle develops: As people spend more time online, they find personal interactions more challenging and experience social anxiety, prompting more online interactions. The preference to communicate through technology might not be a problem on its own, but it can hinder the ability to form communities in real life.

Some individuals struggle to form communities because they harbor politically incorrect thoughts and meet resistance. Yet racist, sexist, homophobic and alt-right communities thrive online. Such communities might be small and inconsequential in any one geographic area, but the internet presents a border-free world, allowing niche, politically incorrect views to thrive. As a result, politically incorrect views become less niche. The Centre for Research in the Arts, Social Sciences and Humanities estimates 60 percent of Britons believe in a conspiracy theory. In France, it’s 79 percent.

Conspiracy theorists and anti-establishment alt-right groups are not distinct, and an investigation by Aric Toler for Bellingcat, the investigative journalism website, suggests the two camps share vocabulary. Embracing conspiracy theories goes along with “rejecting all political and scientific authority, thus changing your entire worldview.”

Rejection of basic and institutional truths contributes to an individual’s vulnerability to radicalization and a rise in extremist views. Internet platforms, whether social media giants like YouTube or Twitter or small online magazines, thrive on clicks and engagement, and operators are keenly aware that outrageous comments or conspiracy theories drive engagement. Bloomberg recently implied that YouTube is aware of the radicalizing effect of video algorithms, offering related content that reinforces users’ views. It is a Pyrrhic success, though, as content fuels disillusionment, frustration and anger.

Algorithms are designed to keep users on the site as long as possible. So, if users search for content opposing vaccinations, YouTube continues serving more anti-vaccination content. A Wellcome study has shown residents of high-income countries report the lowest confidence in vaccinations. France reports the lowest level of trust, with 33 percent reporting they “do not believe that vaccines are safe.”

Such reinforcement algorithms can challenge core democratic ideals, like freedom of speech, by deliberately undermining the marketplace of ideas. The belief underpinning free speech is that truth surfaces through transparent discourse that identifies and counters maliciously false information. Yet automatic algorithms contribute to a situation in which every view is treated as valid and as carrying equal weight, culminating in the “death of expertise.” When it comes to complex, technical and specialist subjects, everyone’s view is not equally valid.

There is nothing wrong with challenging democratic ideals in an open and earnest debate; this is how democracy evolves. Women won the right to vote through open and free discourse. But posts designed to undermine the marketplace of ideas challenge nations’ ontological security – or the security of a state’s own self-conception. If pushed too far, commonly accepted ideas that are held to be true – the world is round; science, democracy and education are good – could start to collapse, jeopardizing national security.

The Cambridge Analytica scandal showed that political messaging could be made more effective by targeting smaller cohorts of people categorized into personality groups. Jack Clark, head of policy at OpenAI, warns that once platforms and their clients have this information, “governments can start to create campaigns that target individuals.” Campaigns, relying on machine learning, could optimize ongoing and expanding propaganda seen only by select groups. Some conspiracy theories are self-selecting, with users seeking out the details they want to see. This contrasts with targeted misinformation, which typically indicates information warfare.

Propaganda campaigns need not be entirely fictitious and can represent partial reality. It is possible to launch a conspiracy theory using real resources: Videos showing only one angle of events can be purported to show the whole story, or real quotes can be misattributed or taken out of context. The most tenacious conspiracy theories reflect some aspect of reality, often using videos showing a misleading series of events, making these much harder to disprove with other media.

In authoritarian countries, with less reliable repositories of institutional data, fact checking is difficult. In Venezuela, open data sources are being closed, and websites that debunk false news and conspiracy theories are blocked. This problem is not new, stemming from the same dictatorial philosophy that leads regimes to imprison journalists or shut down public media stations.

Video is becoming more unstable as a medium for truth, with deepfakes, videos manipulated by deep-learning algorithms, allowing for “rapid and widespread diffusion” and new evidence for conspiracy theories. Creators churn out products with one person’s face convincingly placed over a second person’s face, spouting a third person’s words. There is an ongoing arms race between the effectiveness of these tools and the forensic methods that detect manipulation.

Content can still be fact-checked by providers or users, though this is a slow process. Once a theory or video goes online, the debunking often doesn’t matter if viewers are determined to stand by their views. Users can post content on Facebook, YouTube, Twitter and other social media without fact-checking, and Facebook has come under fire for allowing political advertisements to make false claims. Twitter avoids the issue by banning political ads that mention specific candidates or bills.

Conspiracy theorists are also adept at repackaging. For example, any tales related to the rollout of 5G are “rehashed from 4G.” Likewise, the Notre Dame fire quickly produced anti-Semitic or anti-Islamic theories. Conspiracy theorists link new theories to old ones. In the minds of conspiracy theorists, despite evidence to the contrary, such connections give greater weight to the new theory as a continuation of an established idea they have already accepted as true.

A government can refute conspiracy theories to prevent, as in the case of Notre Dame, a rise in anti-Semitic sentiment. But as trust in government and politicians declines, so does the ability to fight rumors. Marley Morris describes the cycle for Counterpoint: “low levels of trust in politicians can cause people to resort to conspiracy theories for their answers and in turn conspiracy theories construct alternative narratives that make politicians even less likely to be believed.”

James Allworth, head of innovation at Cloudflare, proposes banning algorithmic recommendations or prioritization of results for user-generated content. Policy ideas like this, as well as internal regulations such as Instagram masking “likes” in six countries and then globally, indicate an appetite for industry change.

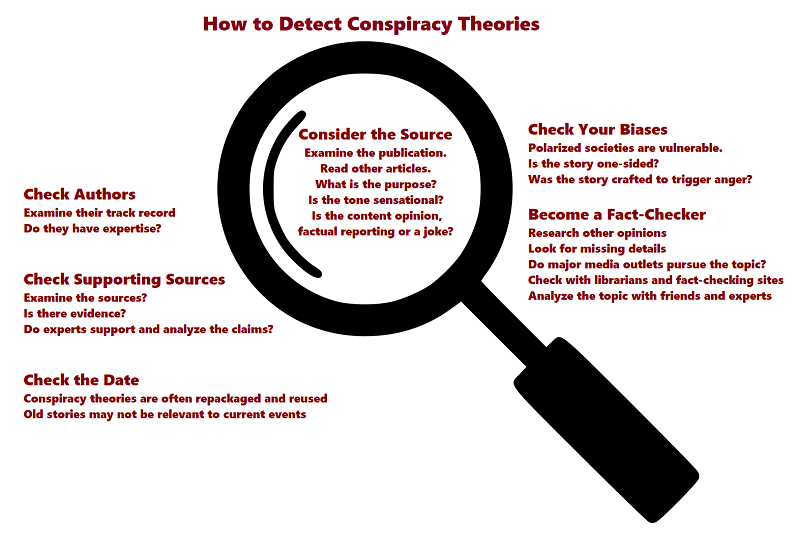

There are solutions at the individual level, too, including deputizing users to flag and report false or misleading content. The paradox is that users reporting problem content are not typical viewers or believers. It’s human nature to be curious about controversial content and engage. And unfortunately, according to Cunningham’s law, “the best way to get the right answer on the internet is not to ask a question; it’s to post the wrong answer.”

*Tom Ascott is the digital communications manager at the Royal United Services Institute for Defence and Security Studies.